This is a companion article to the feature, “Automating Molecular LDTs.”

Completed in 2003 after an effort requiring 13 years and costing $3 billion, the Human Genome Project provided a starting point for the use of genetic data in the clinical diagnostics laboratory. One of the project’s outcomes has been the ongoing drive to reduce the time and costs required to perform DNA sequencing of an individual’s entire genome.

The National Human Genome Research Institute has been tracking the cost per genome since 2001. The cost per genome is currently estimated at approximately $1200, which brings the long-sought “$1000 genome” within reach.

While the costs of both instruments and analysis have been declining, the capabilities of DNA sequencing techniques have been improving rapidly. The “massively parallel” methods of next-generation sequencing (NGS) can now detect thousands of genetic variants and generate an enormous amount of data in a single run. As the clinical significance of individual variants becomes clearer, clinical laboratories can be expected to embrace the technology.

Today, NGS data can help to diagnose disease as well as to predict an individual’s risk of developing future medical conditions. In oncology, NGS data have been used to inform physicians about the genetic makeup of an individual’s tumor and its sensitivity to specific drugs, thereby helping to make targeted therapies more effective.

The pharmaceutical industry has also benefited from NGS data, which can help to identify appropriate biomarkers and to stratify patients enrolled in clinical studies. As the clinical utility of NGS is further demonstrated, private and commercial payors can be expected to support the technology’s ability to provide meaningful data and improve clinical outcomes.

Figure 5. The University at Buffalo Center for Computational Research provides sophisticated bioinformatics capabilities for interpreting the vast amounts of genomic data generated during whole-genome NGS runs. Photo courtesy University at Buffalo.

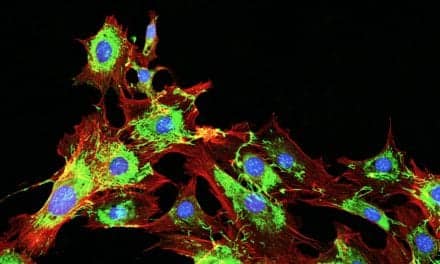

Despite the potential of NGS methods, efforts to implement NGS in clinical laboratory settings raise both “front-end” and “back-end” challenges. Front-end challenges center on the need to first isolate the DNA and prepare it for analysis using “library preparation” methods. Back-end challenges concern interpretation of the vast amounts of data generated by NGS, which requires laboratories to develop and maintain sophisticated bioinformatics capabilities (see Figure 5).

To explore ways of streamlining DNA library preparation for NGS, Rheonix has embarked on a collaborative effort with the University at Buffalo’s Genomics and Bioinformatics Core, located in the New York State Center of Excellence in Bioinformatics and Life Sciences. The effort uses the Rheonix Encompass Optimum workstation, which controls and automates processing steps performed on the company’s CARD cartridge, to streamline the library preparation methods used at the center.

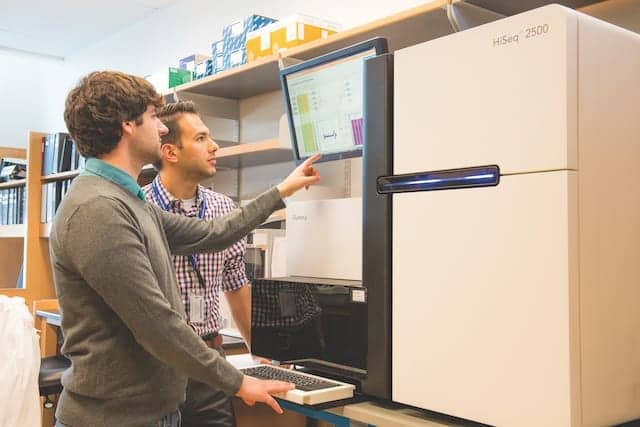

Figure 6. Bioinformatics analysts and lead programmers Jonathan E. Bard, MS (left), and Brandon J. Marzullo, MA, review data generated by the Illumina HiSeq 2500 NGS instrument in the New York State Center of Excellence in Bioinformatics and Life Sciences at the University at Buffalo. Photo courtesy University at Buffalo.

The manual procedure previously used at the NGS center required several pieces of equipment and took approximately 1.5 days to complete. Using the Rheonix platform, the researchers have reduced the time required for library preparation to approximately 3 hours. Moreover, when DNA libraries prepared on the Rheonix platform were sequenced on Illumina MiSeq instruments, they yielded sequence data indistinguishable from those produced by the labor-intensive manual methods previously employed at the center (see Figure 6).

While development efforts are still under way, Rheonix anticipates providing a commercial platform that will dramatically reduce the cost and time required to prepare DNA libraries for sequencing. With an easy-to-use DNA library preparation process based on the Rheonix technology, clinical labs will be able to focus more of their attention on the data generated by NGS.