Summary: Recent research revealed that four proposed AI-driven image search engines for digital histopathology slides performed inadequately for routine clinical use.

Takeaways

- Some of the AI algorithms tested achieved less than 50% accuracy in searching for and retrieving digital histopathology slides, making them unsuitable for clinical practice.

- There is a critical need for standardized testing protocols for AI algorithms in healthcare to ensure they meet the rigorous demands of clinical environments before adoption.

- Efforts are underway to develop new guidelines for AI validation and more reliable algorithms, addressing the current inconsistencies and errors in automated histopathology image retrieval systems.

Four proposed image search engines for automating the search and retrieval of digital histopathology slides performed too poorly for routine clinical care, new research suggests.

The artificial intelligence algorithms meant to power the histopathology image databases performed worse than expected, with some achieving less than 50% accuracy, which is not suitable for clinical practice, says Helen Shang, MD, MS, a third-year internal medicine resident and incoming hematology-oncology fellow at the David Geffen School of Medicine at UCLA.

“Currently, there are many AI algorithms being developed for medical tasks but there are fewer efforts directed on rigorous, external validations,” says Shang, who co-led the study with Dr. Mohammad Sadegh Nasr of the University of Texas at Arlington. “The field has also yet to standardize how AI algorithms should be best tested prior to clinical adoption.”

The paper is published in the peer-reviewed journal NEJM AI.

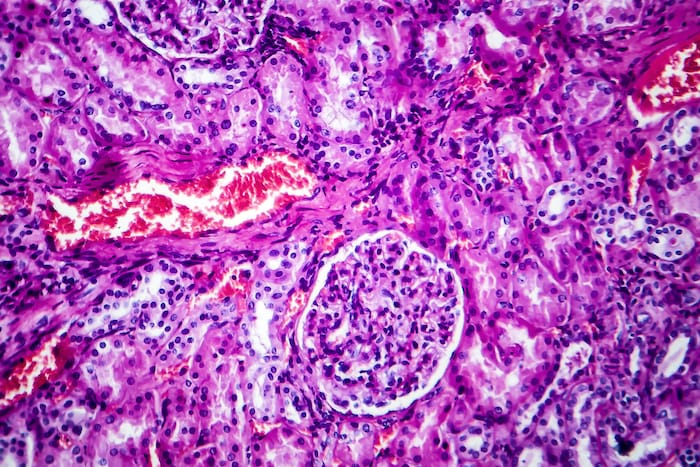

Improving the Search and Retrieval of Histopathology Images

As it now stands, pathologists search for and retrieve histopathology images manually, which is very time-consuming. As a result, there has been growing interest in developing automated search and retrieval systems for digitized cancer images.

The researchers designed a series of experiments to evaluate the accuracy of search engine results on tissue and subtype retrieval tasks, using real-world UCLA cases and larger, unseen datasets. The four engines examined were Yottixel, SISH, RetCCL, and HSHR. Each takes a different approach to indexing, database generation, ranking, and retrieval of images.

Improving on the Flawed AI Tools

Overall, the researchers found inconsistent results across the four algorithms: for instance, Yottixel performed best on breast tissue, while RetCCL had the highest performance on brain tissue. In addition, a group of pathologists rated the search engine results as low to average in quality, with several visible errors.

The researchers are devising new guidelines to standardize the clinical validation of AI tools, Shang says. They are also developing new algorithms that draw on a variety of data types to make more reliable and accurate predictions.

“Our studies show that despite amazing progress in artificial intelligence over the past decade, significant improvements are still needed prior to widespread uptake in medicine,” Shang says. “These improvements are essential in order to avoid doing patients harm while maximizing the benefits of artificial intelligence to society.”


The study’s additional authors are Chace Moleta, MD, and Jitin Makker, MBBS, MD, of UCLA, and Jai Prakash Veerla, Jillur Rahman Saurav, Amir Hajighasemi, Parisa Boodaghi Malidarreh, Manfred Huber, and Jacob Luber, PhD, of the University of Texas at Arlington.

The study was funded by the University of Texas System Rising STARs Award and the CPRIT First Time Faculty Award.