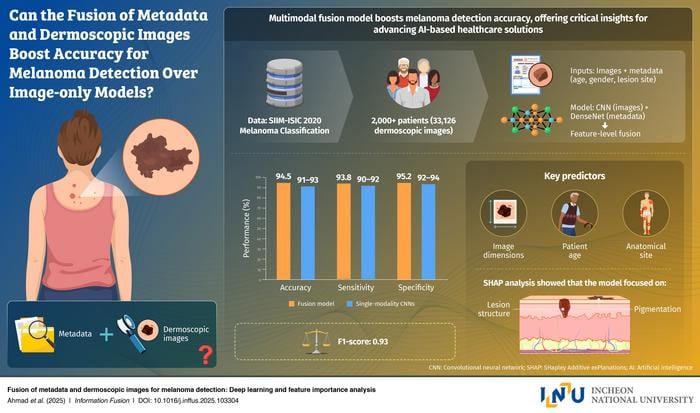

The deep learning system integrates dermoscopic images with patient metadata to improve diagnostic precision for skin cancer screening.

An international research team has developed an artificial intelligence (AI) model that detects melanoma with 94.5% accuracy by combining dermoscopic images with patient clinical data, potentially improving early skin cancer diagnosis in laboratory and clinical settings.

The AI system, created by researchers from Incheon National University in South Korea and collaborating institutions in the UK and Canada, integrates patient metadata such as age, gender, and lesion location with traditional dermoscopic imaging to enhance diagnostic precision beyond image-only approaches.

“Skin cancer, particularly melanoma, is a disease in which early detection is critically important for determining survival rates,” says Professor Gwangill Jeon of the Department of Embedded Systems Engineering at Incheon National University, lead researcher on the study, in a release. “Since melanoma is difficult to diagnose based solely on visual features, I recognized the need for AI convergence technologies that can consider both imaging data and patient information.”

Multimodal Approach Outperforms Image-Only Models

The research team trained their AI model using the SIIM-ISIC melanoma dataset, which contains over 33,000 dermoscopic images paired with clinical metadata. The model achieved an F1-score of 0.94, outperforming popular image-only models such as ResNet-50 and EfficientNet.
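For readers less familiar with the metric, the sketch below shows how accuracy and F1 are derived from the same confusion-matrix counts, and why the two can diverge when malignant cases are rare. The function name and the counts are hypothetical illustrations, not figures from the study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for a screening set with few positives (not study data):
# 20 true melanomas among 1,000 lesions, of which the classifier finds 10.
metrics = classification_metrics(tp=10, fp=5, fn=10, tn=975)
print(metrics)
```

With these made-up counts, accuracy is 0.985 while F1 is about 0.57, which is why F1 is commonly reported alongside accuracy for rare-positive tasks.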

The researchers ran a feature importance analysis to identify key diagnostic factors. Lesion size, patient age, and anatomical site contributed significantly to accurate detection. Surfacing these factors gives the model a degree of transparency that could help clinicians understand and trust AI-generated diagnoses.
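The article does not say which feature importance method the team used. As one common option, the sketch below illustrates permutation importance: shuffle one feature at a time and measure how much accuracy drops. The toy model, the feature order, and the data are all assumptions made for illustration.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy drop after shuffling each feature column in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return drops


# Hypothetical toy model: flags a lesion when its size exceeds 6 mm.
# Feature order (assumed): [lesion_size_mm, age, unrelated_value]
def toy_model(row):
    return 1 if row[0] > 6 else 0


rows = [[size, 30 + size, size % 3] for size in range(1, 21)]
labels = [toy_model(r) for r in rows]  # labels the toy model fits perfectly
drops = permutation_importance(toy_model, rows, labels, n_features=3)
print(drops)
```

Because the toy model only reads lesion size, shuffling the other two columns leaves accuracy unchanged, so their importance is zero. A real analysis would apply the same idea to a trained classifier on held-out data.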

The study, made available online in June, will be published in Information Fusion in December.

Clinical Applications and Laboratory Integration

The model’s design addresses a gap in current AI diagnostic tools, which typically rely solely on dermoscopic images while overlooking crucial patient information that can improve diagnostic accuracy. This multimodal fusion approach could be integrated into laboratory workflows and diagnostic systems.
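To make the idea of multimodal fusion concrete, here is a minimal sketch of one simple strategy: encode the patient metadata as numbers and concatenate it with an image feature vector before classification. This is not the study's architecture, which the article does not describe. The vocabularies, the age scaling, and the stand-in image embedding are all hypothetical.

```python
# Hypothetical category lists; a real system would use its dataset's own values.
GENDERS = ["female", "male"]
SITES = ["head/neck", "torso", "upper extremity", "lower extremity"]


def one_hot(value, vocabulary):
    """Encode a categorical value as a 0/1 vector over the vocabulary."""
    return [1.0 if value == v else 0.0 for v in vocabulary]


def encode_metadata(age, gender, site, max_age=90.0):
    """Turn age, gender, and lesion site into a flat numeric vector."""
    scaled_age = min(age / max_age, 1.0)  # assumed scaling to the range 0..1
    return [scaled_age] + one_hot(gender, GENDERS) + one_hot(site, SITES)


def fuse(image_embedding, metadata_vector):
    """Concatenation fusion: join image features and metadata features."""
    return list(image_embedding) + list(metadata_vector)


# Stand-in for features a CNN would extract from a dermoscopic image.
image_embedding = [0.12, -0.40, 0.88]
fused = fuse(image_embedding, encode_metadata(age=45, gender="female", site="torso"))
print(len(fused), fused)
```

The fused vector would then feed a classifier head. Concatenation is only the simplest choice; other fusion schemes weight or gate the two inputs differently.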

“The model is not merely designed for academic purposes. It could be used as a practical tool that could change real-world melanoma screening,” says Jeon in a release. “This research can be directly applied to developing an AI system that analyzes both skin lesion images and basic patient information to enable early detection of melanoma.”

Future applications could include smartphone-based diagnostic applications, telemedicine systems, or AI-assisted tools in dermatology clinics and laboratories, potentially reducing misdiagnosis rates and improving access to diagnostic services.

The research is a collaboration among Incheon National University, the University of the West of England, Anglia Ruskin University, and the Royal Military College of Canada, highlighting the international scope of AI development in medical diagnostics.

Photo caption: A new deep learning system developed by an international research team detects melanoma with 94.5% accuracy by fusing dermoscopic images and patient metadata such as age, gender, and lesion location. The approach enhances diagnostic precision, transparency, and access to early skin cancer detection through smart healthcare technology.

Photo credit: Professor Gwangill Jeon from Incheon National University, Korea