While X-ray, MRI, and CT scans have transformed the practice of clinical medicine by eliminating the need for many exploratory surgical procedures to diagnose internal malignancies, imaging technologies to aid in skin cancer diagnosis have lagged. The current standard for diagnosing skin diseases, including skin cancer, relies on invasive biopsy followed by histopathological evaluation, which can lead to unnecessary skin biopsies and scars, multiple patient visits, and increased costs for the healthcare system. As one of the emerging noninvasive optical technologies for skin disease diagnosis, reflectance confocal microscopy (RCM) offers a potential biopsy-free, virtual histology solution by providing in vivo images of skin structure with cellular-level resolution. However, RCM images are not in a format that dermatologists and pathologists are familiar with, and analyzing them requires specialized training: RCM images are in black and white, lack nuclear features, and reveal different planes within skin tissue compared to standard histology.

Recently, a team of researchers at UCLA used a deep learning framework to transform RCM images of intact skin, obtained without a biopsy, into images that look like biopsied, histochemically stained skin sections imaged on microscope slides. They trained a convolutional neural network using a generative adversarial scheme to rapidly transform in vivo RCM images of unstained skin into virtually stained volumetric images that resemble hematoxylin and eosin (H&E) staining. This technique, which the team calls “virtual histology,” allows analysis of microscopic images of the skin and bypasses several standard steps used for medical diagnosis, including skin biopsy, tissue fixation, processing, sectioning, and histochemical staining.

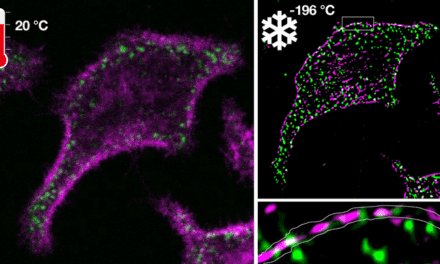

Published in Light: Science & Applications, a journal of Springer Nature, this new 3D virtual staining framework can perform virtual histology on various skin conditions, including normal skin, basal cell carcinoma, and melanocytic nevi with pigmented melanocytes. It also covers different skin layers, including the epidermis, the dermal-epidermal junction, and the superficial dermis. The virtually stained H&E images of unlabeled skin tissue showed color contrast and spatial features similar to those found in histochemically stained microscopic images of biopsied tissue. This deep learning-powered virtual histology approach can eliminate invasive skin biopsies and allow diagnosticians to see the overall histological features of intact skin, without the need for chemical processing or labeling of tissue.

Featured Image: UCLA researchers have developed a technique called “virtual histology” that allows analysis of microscopic images of the skin and bypasses several standard steps used for medical diagnosis, including skin biopsy, tissue fixation, processing, sectioning, as well as histochemical staining. Illustration: Ozcan Lab @ UCLA.